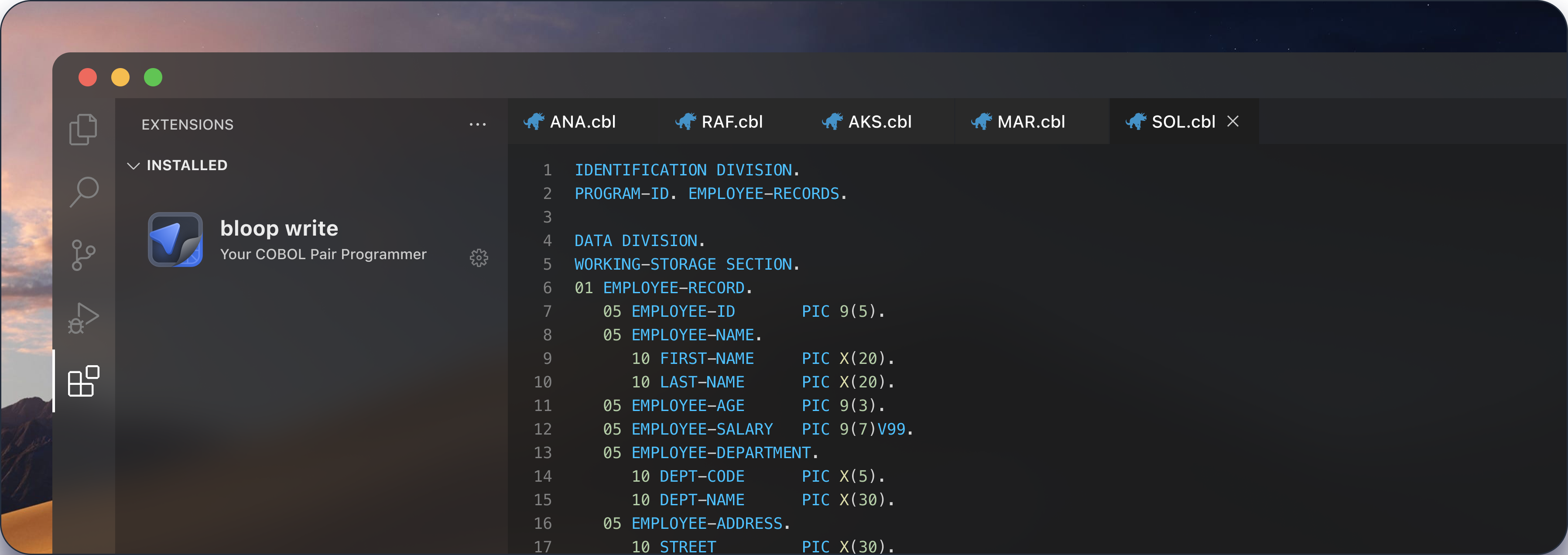

The AI Pair Programmer That Writes COBOL

bloop write is a research project to train large language models that can write COBOL.

Auto-complete

Auto-complete finishes the line of code you're writing in your IDE, taking into account the code above and below it.

Runs offline

The VS Code extension, including the AI model, runs entirely offline on your device, so your code isn't shared with us or any third parties.

Open source

All code used to train our models is open source. Your code never leaves your device, so it’s never used for training.

Understands your program

Code above and below the working line is visible to the model, so that relevant variables and paragraphs can be prioritised.
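
As an illustration of how code above and below the cursor can be handed to a model, here is a minimal sketch that assembles a fill-in-the-middle prompt. It assumes the textual infilling layout published for Code Llama (`<PRE> prefix <SUF>suffix <MID>`); whether bloop write uses exactly this layout is an assumption, and the helper `build_fim_prompt` and its `window` parameter are hypothetical names chosen for this example.

```python
def build_fim_prompt(source: str, cursor: int, window: int = 2000) -> str:
    """Hypothetical helper: build a fill-in-the-middle (FIM) prompt.

    Assumes Code Llama's textual infilling layout
    "<PRE> {prefix} <SUF>{suffix} <MID>"; a model would then generate
    the text that belongs at the cursor position.
    """
    # Code above the cursor, limited to the last `window` characters.
    prefix = source[max(0, cursor - window):cursor]
    # Code below the cursor, limited to the next `window` characters.
    suffix = source[cursor:cursor + window]
    return f"<PRE> {prefix} <SUF>{suffix} <MID>"


# A small COBOL fragment; the cursor sits right after "ADD 1 TO ".
cobol = (
    "       WORKING-STORAGE SECTION.\n"
    "       01 WS-TOTAL PIC 9(5) VALUE 0.\n"
    "       PROCEDURE DIVISION.\n"
    "           ADD 1 TO \n"
    "           DISPLAY WS-TOTAL.\n"
)
cursor = cobol.index("ADD 1 TO ") + len("ADD 1 TO ")
prompt = build_fim_prompt(cobol, cursor)
print(prompt)
```

Because the declaration of `WS-TOTAL` sits in the prefix and its later use sits in the suffix, both are visible when the model fills in the gap. The `window` cap reflects that any model has a finite context length; how much surrounding code bloop write actually includes is not stated here.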

Handles COBOL-specific syntax

The model has been trained to understand COBOL-specific semantics, such as the WORKING-STORAGE SECTION.

Frequently asked questions

Is bloop write experimental?

Extremely! We set ourselves the challenge of finding out whether it was possible to train a model that can understand and write COBOL, given how little open source COBOL is available.

Our first release demonstrates that it is possible, and lays the foundations for continued improvement and specialisation.

Outputs from the model are unstable: use them for research evaluation only, not in production.

How much does bloop write cost?

bloop write is free to use.

All generated code is free for commercial and non-commercial use and licensed under The MIT License.

Do you have access to my code?

No.

AI suggestions are generated locally on your machine, so the suggestions aren't shared with us or any third parties, and neither is any of the code you're working on.

What are the hardware requirements?

Because AI suggestions are generated locally on your machine, you need sufficient hardware and storage space:

- 4 GB of free disk space to download the model

- At least 8 GB of RAM to run the model

- For best performance, a GPU with at least 8 GB of VRAM

What is the model trained on?

The model is trained on:

- The training data of the base model, Code Llama

- Open source code gathered from the internet

- Synthetic code, generated by large language models
